University of Toronto researcher Parham Aarabi has created an artificial intelligence model that mimics how people use e-commerce websites—and it may be able to help retailers optimize their sites for people experiencing color blindness and other conditions.

Called PRE, the AI tool generates virtual users that browse, pause on a page, add items to a cart and click on discounted items.

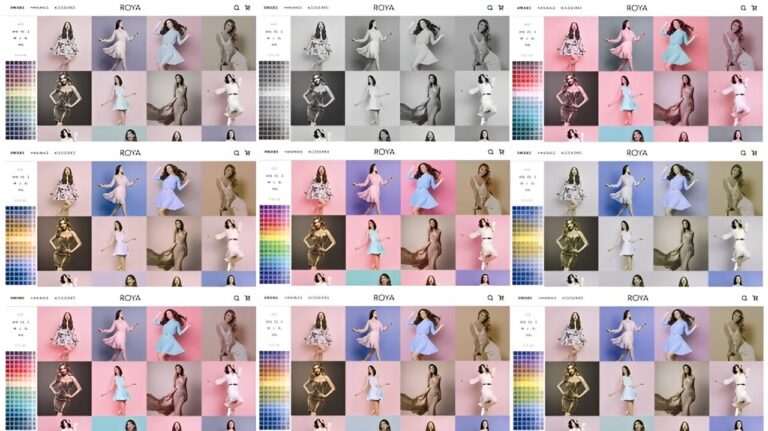

While the tool shows that users tend to be drawn to colorful images, Aarabi also wanted to see how those experiencing full and partial color blindness might respond.

“Around 8% to 10% of the population has a type of color-blindness,” says Aarabi, an associate professor in the Edward S. Rogers Sr. department of electrical and computer engineering in the Faculty of Applied Science & Engineering. “There are a number of ways the eye can be confused by color, commonly between red and green or blue and yellow.

“I wanted to see how this might impact web navigation.”

Aarabi set up an experiment. He altered a retail clothing website to simulate how it would appear to someone with protanomaly, or a reduced ability to perceive red light. One might think of it as applying a filter, or lens, which Aarabi then modified to approximate eight other variations of color deficiency.
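To make the "filter" idea concrete, here is a minimal sketch of how a color-deficiency simulation can be applied to a single pixel. It is not the filter used in the study: the 3x3 matrix is a commonly circulated linear approximation of protanomaly, and the names `PROTANOMALY` and `apply_color_filter` are hypothetical, chosen only for illustration.

```python
# Illustrative sketch only. The matrix below is a widely shared rough
# approximation of protanomaly; it is an assumption, not the study's filter.
PROTANOMALY = (
    (0.817, 0.183, 0.000),  # output red   = mix of input red and green
    (0.333, 0.667, 0.000),  # output green = mix of input red and green
    (0.000, 0.125, 0.875),  # output blue  = mix of input green and blue
)


def apply_color_filter(rgb, matrix=PROTANOMALY):
    """Multiply one (r, g, b) pixel (0-255 per channel) by a 3x3 color matrix."""
    filtered = []
    for row in matrix:
        value = sum(weight * channel for weight, channel in zip(row, rgb))
        # Round to an integer and clamp to the valid 8-bit range.
        filtered.append(max(0, min(255, round(value))))
    return tuple(filtered)


# A pure red pixel loses intensity and shifts toward a duller orange-brown.
print(apply_color_filter((255, 0, 0)))  # (208, 85, 0)
```

Running the same function over every pixel of a page screenshot, with a different matrix for each variation, would give the kind of altered site described above.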

For each variation, Aarabi initiated 1 million navigation sessions with AI virtual users and tracked the image click rates. He found that, in general, someone with color-blindness is 30% more likely than a color-abled user to click on a monochrome image. These results will be presented in a paper at the 46th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (IEEE EMBC 2024) this summer.
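A figure such as "30% more likely" is a relative difference between two click rates. The sketch below shows the arithmetic only. The session and click counts are hypothetical placeholders, not data from the study, and the function names are likewise assumptions.

```python
def click_rate(clicks, sessions):
    """Fraction of sessions in which the image was clicked."""
    if sessions <= 0:
        raise ValueError("sessions must be positive")
    return clicks / sessions


def relative_lift(rate, baseline_rate):
    """Percentage by which `rate` exceeds `baseline_rate`."""
    return (rate / baseline_rate - 1.0) * 100.0


# Hypothetical counts chosen only to illustrate the calculation.
baseline = click_rate(clicks=50_000, sessions=1_000_000)   # unfiltered site
simulated = click_rate(clicks=65_000, sessions=1_000_000)  # filtered site

print(f"{relative_lift(simulated, baseline):.1f}% more likely")
```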

The boost factor that website designers count on with color doesn’t translate to everyone, notes Aarabi.

“When people are designing sites or presenting products, they need to stay cognizant that 8% of the population is not going to be impacted. You need to add better descriptions and more textual information to guide users through the shopping process.”

Aarabi sees this study as one of many that can benefit from PRE, whose neural net took two years to train with data from 110,000 real-life user sessions.

“To measure its accuracy, we set up a sample site and predicted what actions the AI virtual users will take—what percentage would add to cart, what percentage would buy a particular product, and so on—and also ran a test of the site with people,” says Aarabi. “PRE correctly mimics a human user’s actions 90% of the time.”

There are benefits to using AI virtual users for a study: experiments can be run more quickly and on a larger scale, and sessions can be recreated as many times as desired. The AI model eliminates the need, for example, to locate and coordinate many thousands of willing color-blind participants.

Aarabi has plans to use PRE to test other barriers to accessibility, such as dyslexia or motor impairments. His long-term goal is to provide an auditing service for companies that allows them to test a web design’s impact on users with various conditions before or after launch.

Such goals are part of Aarabi’s research effort to mitigate negativity about AI.

“There’s a lot of worry, even within the tech community, about AI taking over or replacing us in some capacity,” he says. “If we can make AI more humanlike in some way, build in some empathy and have it mirror the reactions that humans have, we could dispel some of those concerns.”

“Professor Aarabi has been a pioneer in the application of AI, from past research cautioning against bias in training data sets to this current project, which uses the AI advantage to address accessibility issues,” says Professor Deepa Kundur, chair of the department of electrical and computer engineering. “Parham brings a valuable, forward-thinking approach to leveraging AI for positive outcomes.”

University of Toronto
